BVLC Retreat

Every now and then, an opportunity arises to participate in events that preview advances in deep learning and computer vision. One such event was the Berkeley Vision and Learning Center (BVLC) retreat, where the center showcases the depth and breadth of its research. Industry attendance was significant, including Siemens, Samsung, Huawei, Yahoo, Adobe, Intel, Amazon A9 and others.

A beautiful day at the Berkeley CS department. UC Berkeley is a “college on a hill” with a heaping portion of idyllic charm.

The spirit, if not the focus, of the retreat tended towards deployment and robotics systems. To quote Prof. Darrell, systems must be “articulate, adaptable, and actionable”. The BVLC folks take a long view in which recent advances in deep learning (DL), such as image captioning, are stepping stones to more autonomous robotics systems. Advances in multi-modal DL systems (e.g. image to text, github) are natural requirements for systems that interact with humans in a natural and fluent fashion.

The Gelato Bet: a wager between Prof. Malik and Prof. Efros on the performance of DL methods for labeling which subregions of an image belong to a category. Scientific wagers have a long history, and a certain romanticism, in the name of advancing science.

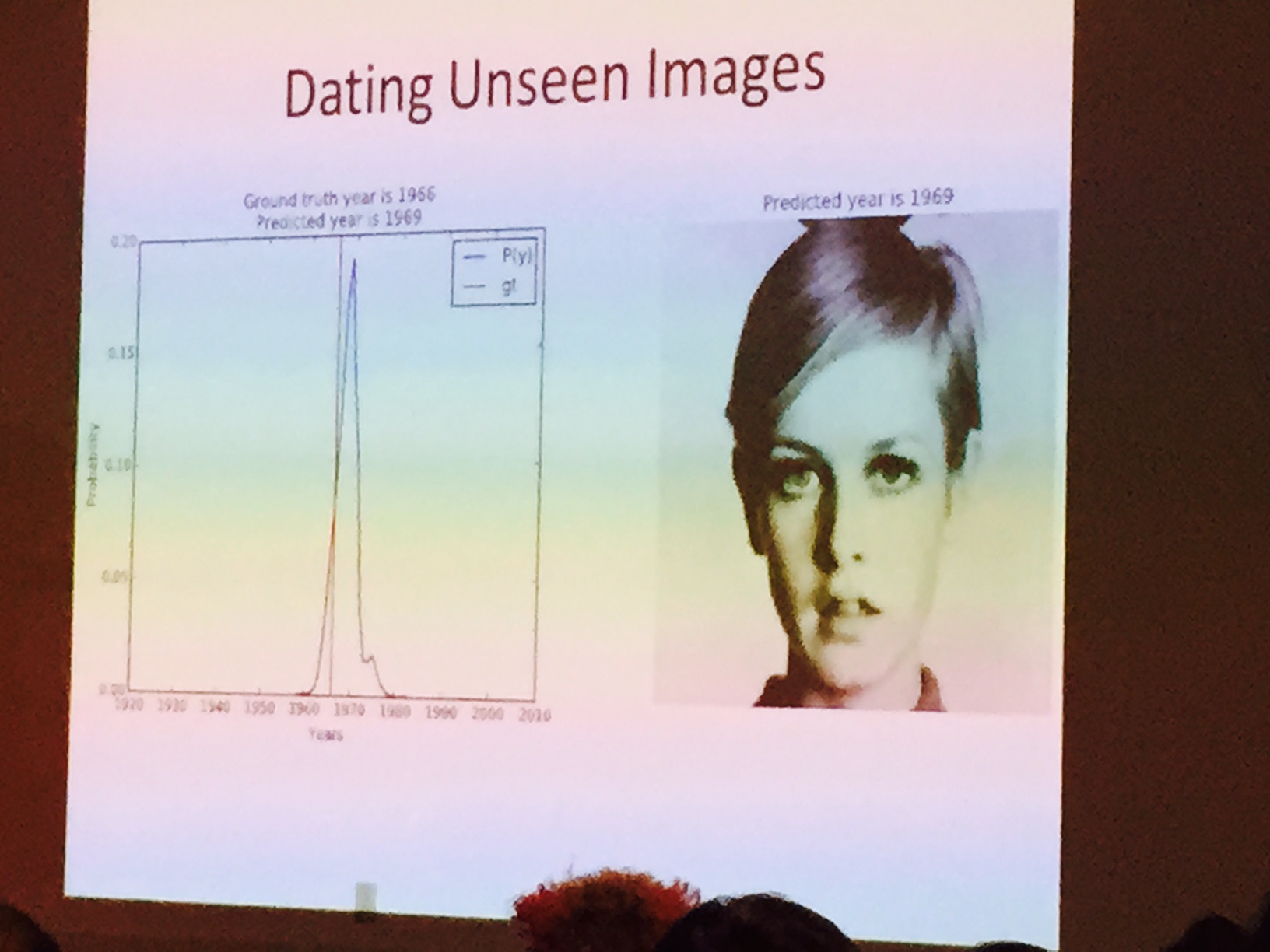

On the pop culture side, BVLC continues to capture the public imagination. Prof. Efros unveiled a system that automatically dates yearbook pictures, achieving near-expert levels of performance. It’s an ingenious application built on a large dataset (yearbook pictures going back to the early 1900s) that is labeled by definition, since every yearbook carries its year. Using visualization techniques on DL networks (a strength of these approaches), features such as smiles and hair styles could be displayed.

Predicting the date of unseen yearbook photos proved to be an apt application of DNNs. Computer vision continues to improve on human-centric vision tasks.

The BVLC retreat tied deep learning more closely to robotics systems, where DL methods continue to deliver leaps in performance on difficult tasks.